Predictive modeling: Linear Regression. Machine Learning vs Data Science

Here are learnings and notes from one of the classes of Data Science course I am attending. They are very technical and a little unorganised, i will clean it up further as i get time. Linear regression is the most popular supervised learning technique or the most popular data science technique in general.

Start of notes:

Linear Regression

X is usually independent variable

Y is usually dependent variable (it’s value changes as the value of X changes..assuming it’s a dependent variable )

What is X and what is Y is based on the question we are asking and how we want to plot X vs Y

Correlation and causation : correlation is a statement of variables having relation with each other for a certain data

Causation is extension of it — we are saying, they may be related, but also - if one is changed, it would cause the other variable to also change. Whether this is true or not may seem easy for common sense if there is a correlation, but we may not have data for that…we may have data just showing correlation.

Hence, correlation does not imply causation. It’s possible changing a could be causing change b..but it’s also possible there is another variable c that could be causing change of both a and b, unless there is data to prove or experiment is done - we cannot conclude that correlation would also lead to causation

In practical example, data of weight and mileage may show negative correlation that if if weight of car is more, mileage is less, based on a dataset.. but this cannot conclude that if you increase the weight, it would definitely decrease mileage but that data is not given or experiments are not conducted, it could be that a third variable that, for example, is torque of the engine that may have impact on both these. It could get proven to be true eventually, for many cases, but we cannot conclude on that without data…it would only be an assumption if we do it, not a proven thing.

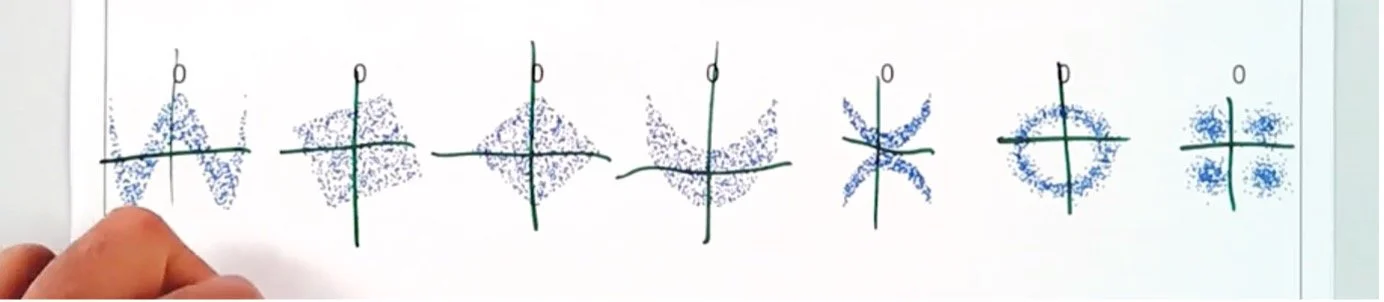

Correlation is a measure of linear relations. If you plot x and y on scatterplot and it’s a circular pattern, the 2 variables are still related as given a value of x you can find value of y easily, the relationship is circular but Correlation formula would given the value of correlation as 0 here because data is equally distributed around the axis in all quadrants.. hence, it only tells there is no LINEAR correlation that exists. Correlation might exist, but it is not linear.

The mean absolute error of a model is the mean of the absolute values of the individual prediction errors and is not differentiable at 0. This makes minimizing it a problem. The mean squared error (MSE) is the mean of the squared values of the individual prediction errors and is differentiable everywhere. So, it is minimized to find the best-fit hyperplane in linear regression.

The R-squared value tells us the proportion of variance of the dependent variable explained by the model. For an R-squared value of k, 100 times k percent of the variance is explained. So, for an R-squared value of 0.85, 85% of the variance is explained by the model.

Correlation numbers based on different graph visualisations

The last row of above is all 0 Correlation because data points are equally distributed in 4 different quadrants

For a flat line, the correlation is 0 / 0 or undefined . You can always determine Y given x, because it’s same value of Y always for any X. But if you are given Y, you cannot determine X. So there is a strong correlation on one variable to another, but the opposite is not true.

We are trying to find out if the variance measurement after the best fit line is found between variables is better than the original variance between the value and its mean before we had the best fit line (or equation… a + bx). R square finds that comparison. If the value is 1, that is the best fit graph could perfectly explain relation between each variable and predict value of x vs y and hence the variation of denominator would be 0…1-0 = 1. If the best fit graph had still the same variable as before that then both numerator and denominator would be same and Rsquare would be 0. This means, we explained nothing.

Hence, Rsquare tells fraction of the variance in y explained by the regression

Simple Linear regression is single-variate and hence has just one independent feature. Whereas multiple Linear Regression is used on multi-variate data samples.

The R-squared value increases when we add a new feature, but the adjusted R-squared value may increase or decrease based on whether the addition of features adds value or not.

The decrease in adjusted R-squared value signifies that the newly added variable does not add value to the model performance. And hence should not be included

Categorical variables represent categories while the best-fit line needs to be fit on numerical values. So, they are encoded before being used in linear regression. Most common encoding is one hot encoding where each value of categorical variable is converted to a column and the value for a given row for that column would be 1 for the row that categorical variable appears, and for other rows that columns would be 0.

The very complex model learns the noise of data along with the useful information, and hence cannot perform on test data.

Cross-validation uses splits the training data to generate multiple smaller train-test splits, which are further used to check the model's performance.

Linear Regression focuses on finding the linear relationship between the independent and dependent variables, and this linear nature makes the interpretation of output coefficients easy. It is not computationally expensive but is heavily affected by the presence of outliers.

Evaluating a model on the test data is a core part of building an effective ML model as the training performance alone cannot give a clear picture of how the model will perform on unseen data.

While it is computationally efficient and interpreting coefficients is easy, Linear Regression assumes that the input features are independent, which might not necessarily be true for all real-world scenarios.

Force-fitting in machine learning means that using training data - the model may become very closely attached to that specific set of data and not generalise it enough to give accurate results/predictions on a test data that’s different from a training data. That’s why keeping test data separate is important to see how the model is behaving using the training data.

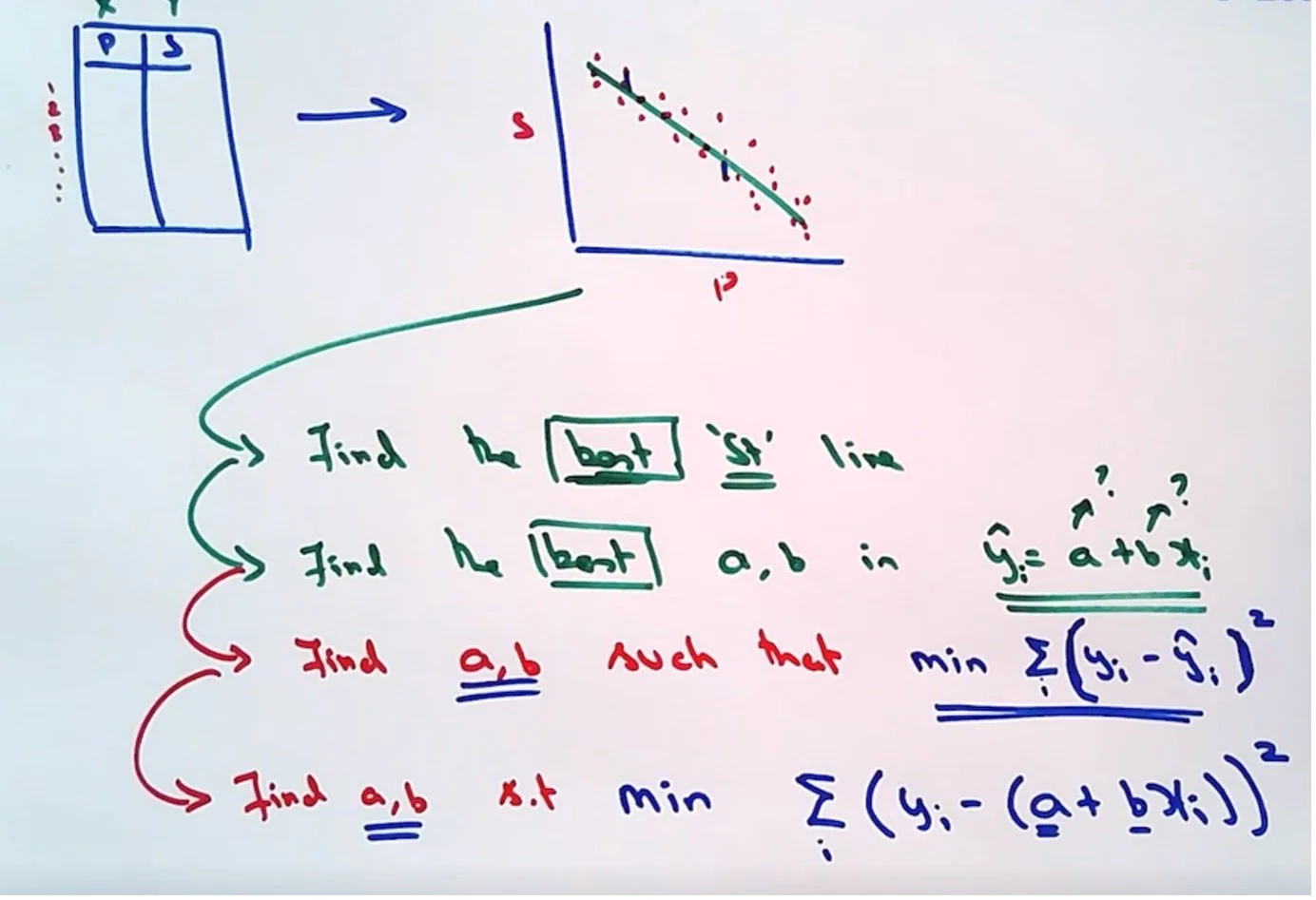

Here is a summary of linear regression problem - its about finding the best straight line that can describe the data, which is to find y (hat) that is the predicted value that has minimum difference from observed value (y), that is -to find a and b in the equation (y hat = a + bx) that leads to minimum value of the square of difference is minimised

In simple linear regression, the best-fit line is the one for which the sum of squared differences between actual and predicted values is minimum.

Data Statistician / Data Science VS Machine Learner / Machine Learning

Both a statistician and a machine learner want to learn from data by building predictive models. What they do after building the models is where they differ.

Here is how a statistician thinks about a and b, and it starts with making an assumption upfront about a (assumed) TRUTH that all data is generated by the equation that is Data Generating Model (DGM).. That DGM is having alpha and beta and the reason they are not same as a & b (for predicting values) is because of noise represented as epsilon. The noise is also called as residual. a and b are called linear regression coefficients. Based on that, the statistician can asked statistical inference type questions.. like .. what can we say about alpha and beta, maybe we can give it a range with 95% confidence (CI - confidence interval), could beta really be zero (hypothesis testing can be done) . The don’t need to separate in sample and out of sample data (or training vs test data) because they are not concerned about over-fitting as it’s assumed that data is coming from linear regression equation only so they are not coming up with a model that might over-fit into the data while trying to come up with a perfect model. This could largely come as a field of mathematics. Statistics has been with us for 3-4 centuries.

A Machine Learner or ML scientist does NOT ASSUME that data is coming from a linear regression model. No Assumption is made on Data Generating Model (DGM). It keeps trying to find that the data could be coming from a different equation, or from a decision tree, or from a neutral network and so on. It can do this because it believes a machine can help find the right model and we don’t need to make assumptions. Statistics from age old times did not have access to computers, and hence to solve problems - they had to make assumptions because all of the work was done manually.

Machine learning has more degrees of freedom because they have more parameters and are more likely to be able to fit the data better, so has better abilities to model any given data. Hence more complex models are likely to fit the data better. Hence machine learners have to be a lot more worried about what over-fitting is, how to be careful about over-fitting , make sure to chop off data between training and testing data. ML models are almost guaranteed to fit the training data better but more complex models are likely to not fit the testing data better because of over-fitting, that is, more complex models are likely to capture the data from the noise and the noise does NOT repeat itself from training to test. So if you take a more complex model from trained data to test data, it’s not going to do well. This could largely come as a field of computer science (largely algorithmic approach is taken). Machine learning has been with us for 3-4 decades

The terms used by Data Scientists vs ML scientists also vary a bit for the same thing.

1- Fit a model vs Learn (in ML)

2- Parameter vs weight

3- co-variate vs feature

Many a times, it is also believed that data statistician are more interested in interpretation and ML scientists are more interested in prediction. In many ways its true because ML experts are ok to use much complex models and neural networks that can predict well but you can’t really interpret how they predicted it (explainability) but interpretability is gaining a lot of popularity in machine learning now a days. Statisticians are, per many experts, as interested in prediction as they are in interpretation but because they tend to gravitate towards simpler models for prediction, it’s just naturally easier to interpret. Machine learner cannot make statistical inference about the data because there was no assumption to begin with, so they can’t hypothesise what alpha and beta (assumed values that exactly fit the model) could be etc. As machine learners do not assume a DGM, they cannot make statistical inferences about the data.

Statisticians assume a data-generating model before beginning the analysis, allowing them to make powerful statistical inferences.

The linear regression coefficients corresponding to the best fit straight line will give us a very close approximation of the actual data-generating model.

Machine learners do not assume a DGM, so they should not stop after checking only a linear model and should explore non-linear models too.

Statisticians are mostly interested in determining the performance of a model on the in-sample data, while machine learners have to check the model performance on the out-of-sample data too to compare multiple models and check for overfitting.

Linear Regression Assumptions

Linear regression assumptions : Linearity, Independence, Normality, Homoscedasticity

The difference between the actual and predicted values are called residuals, and they should be normally distributed in the case of linear regression.

The assumption of homoscedasticity says that the residuals of linear regression should have equal variance.

When doing multiple linear regression, the predictor variables should be independent of each other to ensure that there is no multicollinearity.

If the independent variables are linearly related to the target variable, then the plot of residuals against the predicted values will show no pattern at all.

If the percentile points of two distributions lie on a diagonal 45° straight line, they are said to be close to each other.

In the case of heteroscedasticity, the residuals of linear regression form a pattern (funnel-shaped or others).

When two predictor variables are correlated, then their combined effect is considered and no problems arise for predictions. However, interpretation becomes a challenge as it might not make sense for one or more variables.